TL;DR

- Zero-knowledge proofs allow AI models to be audited without revealing sensitive data or the internal logic of algorithms.
- Zero-Knowledge Machine Learning offers a way to verify that systems do not discriminate without sacrificing privacy or intellectual property.
- Recent advances have improved the scalability of these proofs, bringing them closer to viable adoption in high-demand machine learning environments.

In recent years, the debate around the fairness of artificial intelligence (AI) has gained ground, and for good reason. As algorithmic systems penetrate essential aspects of everyday life, such as access to credit, job opportunities, search engine results, and image generators, the possibility that they operate with bias is no small matter. The challenge lies in auditing these models and guaranteeing that they are genuinely fair without sacrificing users’ privacy or the intellectual property of the companies developing them.

The Potential of Zero-Knowledge Proofs

Zero-knowledge proofs (ZKPs) are emerging as a promising solution. Although the concept comes from academic cryptography and became widely known through the crypto ecosystem as a tool to validate transactions without revealing sensitive information, its potential goes far beyond blockchain. Today, ZKPs can be applied to confirm, privately, that machine learning (ML) models do not discriminate against people based on factors such as race, gender, or socioeconomic status.
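To make the idea of "proving without revealing" concrete, here is a minimal sketch of a classic zero-knowledge-style protocol, a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir heuristic. It is an illustration chosen for this article, not a ZKML system: the prover shows that it knows a secret number behind a public key without disclosing the number. The group parameters are toy values picked for readability and are far too small to be secure, and the function names are hypothetical.

```python
import hashlib
import secrets
from typing import Tuple

# Toy group parameters (assumption, insecure by design):
# P is a safe prime, Q = (P - 1) // 2 is prime, and G generates the subgroup of order Q.
P, Q, G = 23, 11, 2


def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge by hashing the public values (Fiat-Shamir heuristic)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> Tuple[int, int, int]:
    """Return (public_key, commitment, response); the secret itself is never output."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)  # fresh randomness masks the secret in the response
    commitment = pow(G, nonce, P)
    response = (nonce + challenge(public_key, commitment) * secret) % Q
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Accept if G^response == commitment * public_key^challenge (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


public_key, commitment, response = prove(secret=7)
print("proof accepted:", verify(public_key, commitment, response))
```

The verifier only ever sees the public key, the commitment, and the response. Proofs about ML models rely on far more elaborate constructions, but the roles of prover and verifier are the same.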

How Zero-Knowledge Machine Learning Works

The problem of bias in AI is not new. There have been cases of credit scoring engines rating individuals based on their social environment, or image generation systems reproducing stereotypes. This demonstrates that algorithms can amplify existing inequalities. Detecting these flaws is easy once the damage is done. The real difficulty is ensuring they don’t occur in the first place and, even more so, proving this to third parties without compromising sensitive data.

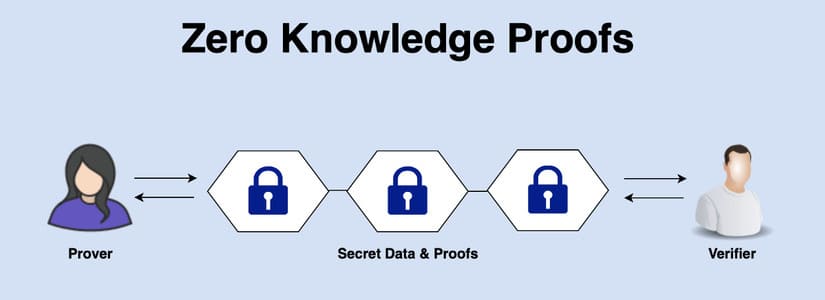

That’s where the combination of zero-knowledge proofs with AI comes into play. This approach, known as Zero-Knowledge Machine Learning (ZKML), makes it possible to verify that a model behaves according to certain criteria, such as not discriminating by protected attributes, without exposing either the training data or the internal logic of the algorithm. In practice, this means an entity could demonstrate that its loan approval system neither privileges nor penalizes any demographic group, all without revealing how the model works or what information it handles.
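One ingredient such an audit needs is a way to pin down which model is being audited without publishing it. The sketch below shows only that step, using a salted hash commitment over a hypothetical list of weights. It is not a zero-knowledge proof; it assumes a real ZKML system would attach a proof about the committed model instead of ever opening the commitment. The names `commit` and `opens_to` are illustrative.

```python
import hashlib
import json
import secrets
from typing import List, Tuple


def commit(weights: List[float]) -> Tuple[str, bytes]:
    """Return (public commitment, private salt) for a list of model weights."""
    salt = secrets.token_bytes(32)  # random salt prevents guessing weights from the digest
    payload = json.dumps(weights).encode()
    return hashlib.sha256(salt + payload).hexdigest(), salt


def opens_to(commitment: str, weights: List[float], salt: bytes) -> bool:
    """Check that the given weights and salt reproduce the published commitment."""
    payload = json.dumps(weights).encode()
    return hashlib.sha256(salt + payload).hexdigest() == commitment


# Hypothetical model: the company publishes only `commitment`.
weights = [0.5, -1.25, 3.0]
commitment, salt = commit(weights)
print("same model:", opens_to(commitment, weights, salt))
print("swapped model:", opens_to(commitment, [0.5, -1.25, 3.1], salt))
```

Because any change to the weights changes the digest, a fairness claim tied to the commitment cannot quietly be transferred to a different model.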

The Scalability Challenge

For a long time, the main obstacle for this technology was its limited scalability. Early implementations could only audit one specific stage of the machine learning process, leaving room for manipulation before or after that point. Additionally, processing times were too long for real-time applications. However, recent advances in zero-knowledge proof frameworks have extended their reach to models with millions of parameters while keeping results verifiable and secure.

What Does “Algorithmic Fairness” Mean?

A key point in this debate is what we mean by “algorithmic fairness.” There are various definitions. For example, demographic parity seeks to ensure that all groups have the same probability of obtaining a certain outcome. Meanwhile, equality of opportunity proposes that those who are equally qualified receive the same chances of success, regardless of their background. And predictive equality aims for prediction accuracy to be similar across all groups. These metrics don’t always align, but they provide objective parameters for evaluating models.
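The three definitions above can be sketched as simple per-group statistics. The example below is a minimal illustration on made-up data, not a production audit: it follows this article's wording and measures predictive equality as per-group accuracy, although some of the literature defines that term through false positive rates instead. The function names and the sample data are hypothetical.

```python
from typing import Dict, List


def rate(values: List[int]) -> float:
    """Share of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def fairness_report(y_true: List[int], y_pred: List[int],
                    group: List[str]) -> Dict[str, Dict[str, float]]:
    """Compute per-group statistics behind the three fairness definitions."""
    report = {}
    for g in sorted(set(group)):
        idx = [i for i, member in enumerate(group) if member == g]
        report[g] = {
            # Demographic parity: share of positive outcomes in the group.
            "positive_rate": rate([y_pred[i] for i in idx]),
            # Equality of opportunity: positive outcomes among the truly qualified.
            "true_positive_rate": rate([y_pred[i] for i in idx if y_true[i] == 1]),
            # Predictive equality (as described in the text): accuracy in the group.
            "accuracy": rate([int(y_pred[i] == y_true[i]) for i in idx]),
        }
    return report


def max_gap(report: Dict[str, Dict[str, float]], metric: str) -> float:
    """Largest difference in a metric between any two groups."""
    values = [stats[metric] for stats in report.values()]
    return max(values) - min(values)


# Made-up example: 1 = qualified / approved, 0 = not.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = ["a"] * 4 + ["b"] * 4

report = fairness_report(y_true, y_pred, group)
for metric in ("positive_rate", "true_positive_rate", "accuracy"):
    print(metric, "gap:", max_gap(report, metric))
```

On this sample, the two groups differ by 0.5 in both positive rate and true positive rate yet have identical accuracy, a small instance of the metrics failing to align.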

Regulation and Political Priorities

Beyond technical considerations, this debate takes place within an increasingly demanding regulatory framework. The United States and other major economies have issued guidelines to control bias in AI use, although priorities vary depending on political cycles. While some administrations promote policies aimed at outcome equity, others prioritize equality of opportunity. This creates a constant tension around how to define and ensure fairness in automated systems.

A Tool for the AI of the Future

One of the most interesting advantages of zero-knowledge proofs is that they can, in principle, be adapted to whichever fairness definition a regulator adopts without compromising privacy or corporate competitiveness. They enable models to be audited continuously and autonomously, reducing reliance on manual reviews or state interventions that often arrive too late.

Artificial intelligence already intervenes in decisions that affect real lives. Having transparent, secure mechanisms to verify its behavior has become essential. Zero-knowledge proofs, which came of age within blockchain technology, could become the standard for fair, trustworthy, and privacy-respecting artificial intelligence.