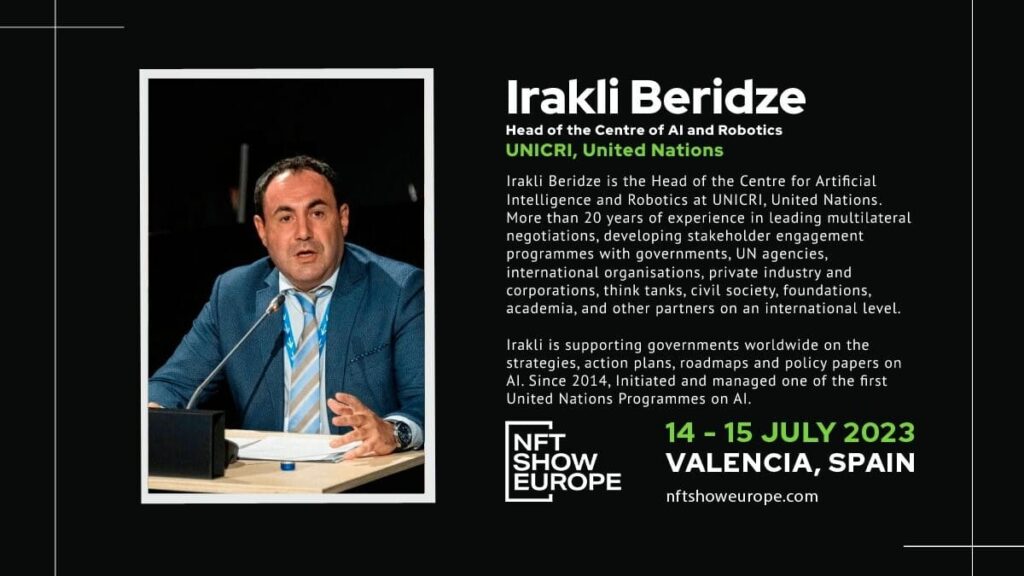

We are delighted to announce that Mr. Irakli Beridze, Head of the Centre for Artificial Intelligence and Robotics at UNICRI, United Nations, has graciously responded to some questions on his expertise and work in the field of artificial intelligence (AI).

With over 20 years of experience directing multilateral discussions and building international stakeholder engagement programs, Mr. Beridze has been instrumental in assisting governments throughout the world with AI strategies, action plans, roadmaps, and policy papers.

In the following Q&A session, Mr. Beridze shares unique insights into the UN’s approach to AI, its potential influence on society and the economy, and the problems that must be addressed to enable responsible AI development and deployment.

1. Your passion for technology and security is evident in your work at UNICRI. Could you share the pivotal moments or experiences that inspired you to delve into this niche and ultimately join UNICRI?

Thanks for your question! Throughout my career, for the last 23 years, I have been working on the “duality” of the risks and benefits of technology and its effects on security. At the start of my career, I worked on issues related to the disarmament of chemical weapons, while also ensuring that the benefits of the peaceful use of chemistry are well understood and widely available in all corners of the world. Similarly, I worked on the risk mitigation of chemical, biological, radiological and nuclear weapons and on capacity building for UN member states by supporting the development of knowledge and expertise in these fields around the world. For the last 8 years, I have been in charge of programs aimed at ensuring that exponentially growing frontier technologies, such as Artificial Intelligence, are used to solve crime-related problems, but responsibly and in a human rights-compliant manner. The balance within this duality is crucial for technological development and for security governance in general.

2. With the ever-growing landscape of cybersecurity threats, which ones do you consider the most pressing, and what innovative approaches can we adopt to counteract them effectively?

Firstly, there are different kinds of cyber threats and different kinds of cyberattacks. One of the most common is ransomware, a type of malware that encrypts a victim’s files and demands payment in exchange for the decryption key. It is a major threat to organizations of all sizes and can cause significant disruption and financial loss. One innovative approach to counteracting ransomware is to implement effective backup and disaster recovery strategies, which can help organizations recover quickly from an attack without paying the ransom.

Phishing is another prominent cyberattack, in which cybercriminals trick individuals into divulging sensitive information, often through ostensibly legitimate emails. Such an attack relies on an unsolved cyber threat, namely social engineering.

Social engineering is a technique used by cybercriminals to exploit human error rather than cybersecurity weaknesses. It can involve impersonation, deception, or the exploitation of trust. One innovative approach to counteracting social engineering is to provide training and awareness programs for employees, which can help educate them about the risks and about how to identify and respond to social engineering attacks.

However, the cyber threats I deal with at UNICRI are various cybercrimes that are on the rise, particularly online child sexual exploitation and abuse. This crime is a major and growing threat that has increased exponentially with the development of technology such as social media and online chat rooms. One of our main projects here at the Centre for AI and Robotics is facilitating the use of innovative technologies to combat this type of crime, such as employing machine learning algorithms to identify victims or to identify and track down perpetrators.

Overall, it is important to recognize that these cybersecurity threats require a comprehensive approach to address them effectively. This includes implementing secure-by-design technologies that prioritize security from the outset, innovative approaches such as the use of AI to detect and respond to threats in real-time, providing training and awareness programs, and engaging in public-private partnerships with law enforcement and other stakeholders to share threat intelligence and resources in order to investigate and prosecute cybercriminals.

3. In your experience, have you observed emerging technologies like AI or blockchain playing a significant role in combating cybercrime? How might these technologies redefine the future of cybersecurity?

Yes, I have observed emerging technologies like AI and blockchain playing a significant role in combating cybercrime. For instance, AI can be used to analyze large volumes of data and identify patterns and anomalies that may indicate cyber threats. Similarly, blockchain can provide a tamper-proof and decentralized ledger that can help secure data and transactions. Through my role as Head of the UNICRI Centre for AI and Robotics, I have talked to law enforcement representatives worldwide who demonstrate that AI is already contributing to and making a difference in many areas. The trick is to find the right area and application to maximize the potential of a specific AI tool.

For instance, from our work on the AI for Safer Children initiative we have seen first-hand that AI is already contributing to the fight against online child sexual exploitation and abuse by cutting investigation times: facial recognition and object detection tools can help link files showing the same victim or setting within a huge amount of data to build a case in an investigation. According to members of our network who use these tools on a daily basis, analysis of child abuse images and videos that used to take 1 to 2 weeks can now be done in 1 day. AI tools have also significantly cut forensic backlogs, from over 1.5 years down to 4 to 6 months. This is all time that can make a world of difference for victims.

These tools, however, cannot be used on their own; they simply aid human investigators in prioritization and time management. That is one example of why the way forward for AI in cybersecurity must take into account principles of responsibility and ethics if it is to be more widely accepted and thus adopted, which in turn calls for new regulations and governance models to ensure that these technologies are used ethically and responsibly.

If the principles of responsibility are upheld, these technologies do indeed have the potential to redefine the future of cybersecurity by enabling us to detect and respond to threats more quickly and effectively.

4. Balancing security needs with individual privacy rights is a complex challenge in today’s digital world. What key obstacles have you encountered in this area, and how can governments and organizations navigate them?

You raise a very important question. Through the implementation of a project with INTERPOL and with the support of the European Commission, we have learnt a huge amount about pitfalls associated with the use of AI and the main challenges in addressing them.

Together, we are developing a Toolkit for Responsible AI Innovation in Law Enforcement, a practical and operationally oriented guide aimed at supporting the law enforcement community in developing and deploying AI responsibly, respecting human rights and ethics principles. If we are to tap into the true potential of AI, public confidence in its safe and ethical use is an essential prerequisite for effective implementation, and it can only be achieved through steps taken by several stakeholders.

Firstly, technology companies should implement privacy and ethics-by-design right from the start of development, creating AI systems that are transparent, explainable, and accountable, for example by ensuring that AI systems are trained using unbiased data. Secondly, end-users must incorporate human oversight into the decision-making process and be aware of AI’s potential for bias and shortcomings, as well as be as transparent as possible with the public about how and when they are using AI. It is also important to engage with communities and stakeholders to ensure that their concerns about privacy and bias are addressed and to establish trust in the use of AI in law enforcement. Finally, governments must establish clear legal and regulatory frameworks that govern the use of AI in law enforcement.

The key to upholding privacy and other human rights is accountability and human oversight, accomplished through transparency in how these systems are developed and used. I’m not saying that we should post any and all sensitive information for the public to view, but rather that we should institute reliable assessments at every stage of developing innovative technology, as well as regular reviews after deployment.

Ultimately, it is important to recognize that privacy and security are not mutually exclusive (we have heard from many angles that it cannot be a zero-sum game between privacy and security) and that a balanced approach is needed both to develop innovative solutions with privacy-by-design and to protect the public from misuse of AI.

5. What role can international organizations like the UN play in bolstering cybersecurity and curbing cybercrime? How can these organizations foster more efficient collaborations with various stakeholders to achieve their objectives?

International organizations like the UN can play a significant role in bolstering cybersecurity and curbing cybercrime by providing a platform for information-sharing and collaboration among various stakeholders, including governments, private sector organizations, civil society groups, and technical experts. Their initiatives can furthermore provide technical assistance to countries that lack the resources or expertise to combat cyber threats effectively.

One way the UN can achieve these objectives is through the establishment of joint research initiatives, capacity building programs, and other collaborative projects that promote best practices and innovations in cybersecurity. The UN can provide a platform for member states to share information and best practices, develop common standards and norms, and coordinate responses to cyber threats. For example, the United Nations Interregional Crime and Justice Research Institute (UNICRI) specializes in crime prevention and criminal justice and, since 2017, has operated its Centre for AI and Robotics in The Hague. This Centre specializes in frontier technologies such as AI, both by researching the risks of abuse of these technologies by criminal actors and by exploring the vast potential of AI and other tools to combat crime.

Operating under UN auspices not only lends the global coverage needed to address borderless cybercrimes, but also helps ensure that UN principles and values are instilled throughout the work. The UN’s efforts to advance principles such as diversity and inclusion, human rights and the rule of law, and equality make it a truly trustworthy and global facilitator through which we can unite the efforts of diverse stakeholders. The principle of sustainable development further guides it to look beyond temporary solutions toward a long-term vision of sustainable capacity building for governments and the international community at large.

To that end, it is important for international organizations like the UN to engage with local communities and stakeholders to ensure that truly inclusive perspectives and concerns are taken into account when developing cybersecurity policies and programs. Ultimately, by fostering collaboration and coordination on cybersecurity issues, international organizations like the UN can help to promote a safer and more secure digital world for all.

6. We’re eager to learn about any intriguing projects or initiatives UNICRI has recently embarked upon in the realm of tech and security. Could you share some highlights with us?

The UNICRI Centre for AI and Robotics is involved in a number of initiatives and projects aimed at addressing the complex and evolving challenges at the intersection of technology and security. Here are some highlights of our recent work:

1. The AI for Safer Children initiative and Global Hub: The Global Hub is a platform available exclusively to law enforcement which provides numerous services, including a catalogue of existing software tools applicable at various stages of the investigative process, as well as a Learning Centre and case study offering guidance on how to implement these tools and how to do so responsibly. The platform also offers a communication section where investigators from all over the world can connect with each other and share their own experiences of using AI in their work. To further build capacities, in 2023 the AI for Safer Children initiative will also offer specialized training free of charge, tailored to the specific needs and contexts of Global Hub members.

2. Toolkit for Responsible AI Innovation in Law Enforcement: a practical guide for law enforcement agencies on the development and deployment of AI in a human rights-compliant and ethical manner. Flowing from the first INTERPOL–UNICRI Global Meeting on Artificial Intelligence for Law Enforcement in 2018, the project was formally launched in November 2021 and the Toolkit is being rolled out for testing this year, 2023.

3. The Responsible Use of Facial Recognition in Law Enforcement Investigations: The deployment of facial recognition technology for law enforcement investigations is arguably among its most sensitive use cases, due to the potentially disastrous effects of system errors or misuse in this domain. Starting in early 2021, UNICRI, through its Centre for AI, partnered with INTERPOL, the World Economic Forum and the Netherlands Police to launch this initiative establishing responsible limits on the use of facial recognition technology in law enforcement investigations. This resulted in the release of a proposed policy framework, contained in the joint publication Policy Framework for Responsible Limits on Facial Recognition.

These are just a few examples of the exciting and impactful work that our Centre is doing in the realm of tech and security. Our goal is to continue to innovate and collaborate with partners around the world.

7. As attendees gear up for the upcoming event, what insights do you hope to impart through your talk? What pressing issues face the cybersecurity community at present, and how can we collectively devise strategies to tackle them?

The technological developments discussed today are extremely encouraging for us all. The power of AI is here to stay, and we at the UNICRI Centre for AI and Robotics are committed to harnessing its potential for the benefit of societies worldwide.

We look forward to delving into this conversation at #NFTSE 2023, joined by other professionals from the field in a round-table discussion.

As we continue to incorporate AI into every part of our lives, it is critical that the technology is created and used in an inclusive, ethical, and egalitarian manner. With Mr. Beridze’s advice and expertise, we may get closer to realizing this vision of a future that maximizes the benefits of AI while limiting its risks and obstacles.

We appreciate Mr. Beridze’s time and ideas, and we look forward to further progress in the responsible development and deployment of AI in the coming years.

Don’t miss the opportunity to meet Mr. Beridze at #NFTSE and network with other leaders in the field.

Plan ahead: the Early Bird ticket discount closes very soon, on May 1st.

More about NFT Show Europe #NFTSE

NFT Show Europe is one of the world’s leading events on Web3, blockchain, the metaverse and digital art. It is an international meeting point for experts to share their insights on the next era of the internet in a futuristic business-art atmosphere.

Links:

#NFTSE 2023 Official Trailer: https://youtu.be/Rf9jazG1yE0

Website: https://nftshoweurope.com/

Twitter: @nftshoweurope